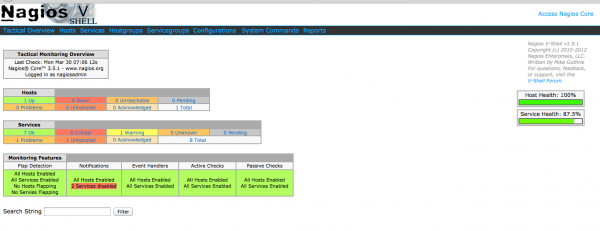

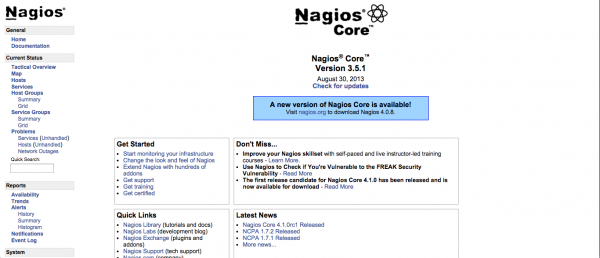

If you have set up Nagios on your server and want to install vShell to give it a nicer interface, just follow the guide below!

Download vShell

The first thing you need to do is download vShell:

wget http://assets.nagios.com/downloads/exchange/nagiosvshell/vshell.tar.gz

You can download it into any directory; the location does not matter.
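Before extracting, you might want to confirm that the download really is a gzip tarball and not, say, an HTML error page. Here is a minimal sketch; `check_vshell_tarball` is a hypothetical helper (not part of vShell) that only relies on `tar -tzf`, which lists an archive's contents without extracting it.

```shell
# Hypothetical helper: succeed only if the file is a readable gzip tarball.
check_vshell_tarball() {
    local archive="${1:-vshell.tar.gz}"
    if [ ! -s "$archive" ]; then
        echo "missing or empty: $archive" >&2
        return 1
    fi
    # tar -tzf lists the contents without extracting; it fails on a corrupt or non-gzip file.
    if tar -tzf "$archive" > /dev/null 2>&1; then
        echo "ok: $archive"
    else
        echo "not a valid .tar.gz: $archive" >&2
        return 1
    fi
}
```

Run it as `check_vshell_tarball vshell.tar.gz` in the directory you downloaded to.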

Install vShell

Now extract the archive and change into the new directory:

tar -zxvf vshell.tar.gz
cd vshell

Before you start installing, check the installer's configuration:

vi install.php

Make sure the paths are correct:

// ***********MODIFY THE DIRECTORY LOCATIONS BELOW TO MATCH YOUR NAGIOS INSTALL*********************
//target directory where vshell's web files will be stored
define('TARGETDIR',"/usr/local/vshell");
//target directory where your current apache configuration directory is located
define('APACHECONF',"/etc/httpd/conf.d");
//default for ubuntu/debian installs
//define('APACHECONF',"/etc/apache2/conf.d");
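If you'd rather not scroll through install.php, you can print its active `define(...)` lines and check whether each directory exists on your machine. This is a minimal sketch that assumes the `define('NAME',"path");` layout shown above; `show_install_paths` is a hypothetical helper. TARGETDIR may not exist yet before installing, but APACHECONF should already be there.

```shell
# Hypothetical helper: print each active define('NAME',"value") from install.php
# and say whether the value is an existing directory on this machine.
show_install_paths() {
    local file="${1:-install.php}"
    # Only uncommented lines match, so //define('APACHECONF',...) is skipped.
    grep -E "^[[:space:]]*define\(" "$file" |
    sed -E "s/^[[:space:]]*define\('([A-Z_]+)',[[:space:]]*\"([^\"]*)\"\).*/\1 \2/" |
    while read -r name value; do
        if [ -d "$value" ]; then
            echo "$name $value (exists)"
        else
            echo "$name $value (missing)"
        fi
    done
}
```

Run it from inside the extracted vshell directory as `show_install_paths install.php`.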

Since I'm on CentOS with Apache, the defaults were already correct for me, so all I had to do was run the installer:

./install.php

And you're done! You can access vShell the same way as Nagios, at http://localhost/vshell

Important vShell paths

There are a few files you should know about, since the steps above don't show where everything ends up:

/etc/httpd/conf.d/vshell.conf #apache vshell setup
/etc/vshell.conf #vshell configuration

Now make sure that the AuthUserFile in /etc/httpd/conf.d/vshell.conf points to the same password file (htpasswd.users) that Nagios uses, or else you might not be able to log in to vShell!
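To check this quickly, you can compare the AuthUserFile directive in the Nagios and vShell Apache files. This is a sketch assuming the CentOS paths used in this guide; `compare_auth_files` is a hypothetical helper, and it only looks at the first uncommented AuthUserFile line in each file.

```shell
# Hypothetical helper: compare the AuthUserFile used by the Nagios and vShell Apache configs.
# Defaults are the CentOS paths from this guide; pass other paths as arguments.
compare_auth_files() {
    local nagios_conf="${1:-/etc/httpd/conf.d/nagios.conf}"
    local vshell_conf="${2:-/etc/httpd/conf.d/vshell.conf}"
    local n v
    # Take the path from the first uncommented AuthUserFile directive in each file.
    n=$(awk 'tolower($1) == "authuserfile" { print $2; exit }' "$nagios_conf")
    v=$(awk 'tolower($1) == "authuserfile" { print $2; exit }' "$vshell_conf")
    echo "nagios: ${n:-<none>}"
    echo "vshell: ${v:-<none>}"
    # Exit status 0 only when both files name the same password file.
    [ -n "$n" ] && [ "$n" = "$v" ]
}
```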

Troubleshooting

Once you've done the above, you might run into a few problems, such as:

Unable to login to vshell

Open up /etc/httpd/conf.d/vshell.conf and /etc/httpd/conf.d/nagios.conf

Change the AuthUserFile in vshell.conf to match the one in nagios.conf, for example

from

AuthUserFile /usr/local/nagios/passwd

to

AuthUserFile /etc/nagios/passwd

The exact paths may differ on your system, but the idea is the same.
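If you'd rather not edit the file by hand, the change can be scripted. This is a minimal sketch, assuming the CentOS file locations from this guide; `sync_auth_user_file` is a hypothetical helper that keeps a vshell.conf.bak copy and rewrites every uncommented AuthUserFile line in vshell.conf with the path from nagios.conf.

```shell
# Hypothetical helper: copy the AuthUserFile path from nagios.conf into vshell.conf.
# A backup of vshell.conf is kept as vshell.conf.bak.
sync_auth_user_file() {
    local nagios_conf="${1:-/etc/httpd/conf.d/nagios.conf}"
    local vshell_conf="${2:-/etc/httpd/conf.d/vshell.conf}"
    local path
    path=$(awk 'tolower($1) == "authuserfile" { print $2; exit }' "$nagios_conf")
    if [ -z "$path" ]; then
        echo "no AuthUserFile found in $nagios_conf" >&2
        return 1
    fi
    cp "$vshell_conf" "$vshell_conf.bak"
    # Replace the path on every uncommented AuthUserFile line, keeping its indentation.
    sed -E "s|^([[:space:]]*AuthUserFile[[:space:]]+).*|\1$path|" "$vshell_conf.bak" > "$vshell_conf"
}
```

Apache only reads the new value after a restart, for example `service httpd restart` on CentOS.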

Unable to open '/usr/local/nagios/var/objects.cache' file!

If you see the above error after logging in, open /etc/vshell.conf and /etc/nagios/nagios.cfg, and change OBJECTSFILE in vshell.conf from

; Full filesystem path to the Nagios object cache file
OBJECTSFILE = "/usr/local/nagios/var/objects.cache"

to

; Full filesystem path to the Nagios object cache file
OBJECTSFILE = "/var/log/nagios/objects.cache"

The correct value is whatever object_cache_file is set to in your nagios.cfg.
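You can also copy the value across with a small script. This is a sketch assuming nagios.cfg uses plain `object_cache_file=...` lines and vshell.conf uses the `OBJECTSFILE = "..."` form shown above; `sync_objects_file` is a hypothetical helper, and it keeps a vshell.conf.bak copy.

```shell
# Hypothetical helper: read object_cache_file from nagios.cfg and write it into
# the OBJECTSFILE line of vshell.conf (a .bak copy is kept).
sync_objects_file() {
    local nagios_cfg="${1:-/etc/nagios/nagios.cfg}"
    local vshell_conf="${2:-/etc/vshell.conf}"
    local path
    # nagios.cfg uses key=value lines; commented lines start with '#' and are skipped.
    path=$(sed -n 's/^object_cache_file=//p' "$nagios_cfg" | head -n 1)
    if [ -z "$path" ]; then
        echo "object_cache_file not set in $nagios_cfg" >&2
        return 1
    fi
    cp "$vshell_conf" "$vshell_conf.bak"
    # Rewrite only the value of OBJECTSFILE, keeping the 'OBJECTSFILE = ' prefix.
    sed -E "s|^([[:space:]]*OBJECTSFILE[[:space:]]*=[[:space:]]*).*|\1\"$path\"|" "$vshell_conf.bak" > "$vshell_conf"
}
```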

Unable to open '/usr/local/nagios/var/status.dat' file!

If you see the above error after logging in, open /etc/vshell.conf and /etc/nagios/nagios.cfg, and change STATUSFILE in vshell.conf from

; Full filesystem path to the Nagios status file
STATUSFILE = "/usr/local/nagios/var/status.dat"

to

; Full filesystem path to the Nagios status file
STATUSFILE = "/var/log/nagios/status.dat"

The correct value is whatever status_file is set to in your nagios.cfg.
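Since the status file and object cache fixes follow the same pattern, a generic version can copy any `key=value` setting from nagios.cfg into a `KEY = "value"` line in vshell.conf. This is a sketch under the same assumptions about both file formats; `sync_vshell_key` is a hypothetical helper, and it leaves every other line of vshell.conf untouched.

```shell
# Hypothetical helper: copy a key=value setting from nagios.cfg into a
# KEY = "value" line in vshell.conf, keeping a .bak copy.
# Usage: sync_vshell_key VSHELL_KEY NAGIOS_KEY [nagios.cfg] [vshell.conf]
sync_vshell_key() {
    local vkey="$1" nkey="$2"
    local nagios_cfg="${3:-/etc/nagios/nagios.cfg}"
    local vshell_conf="${4:-/etc/vshell.conf}"
    local path
    # First uncommented NAGIOS_KEY=... line; everything after '=' is the value.
    path=$(sed -n "s/^$nkey=//p" "$nagios_cfg" | head -n 1)
    if [ -z "$path" ]; then
        echo "$nkey not set in $nagios_cfg" >&2
        return 1
    fi
    cp "$vshell_conf" "$vshell_conf.bak"
    sed -E "s|^([[:space:]]*$vkey[[:space:]]*=[[:space:]]*).*|\1\"$path\"|" "$vshell_conf.bak" > "$vshell_conf"
}
```

For this error you would run `sync_vshell_key STATUSFILE status_file`; `sync_vshell_key OBJECTSFILE object_cache_file` covers the previous one.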

Unable to open '/usr/local/nagios/etc/cgi.cfg' file!

If you see the above error after logging in, open /etc/vshell.conf and change CGICFG from

; Full filesystem path to the Nagios CGI permissions configuration file
CGICFG = "/usr/local/nagios/etc/cgi.cfg"

to

; Full filesystem path to the Nagios CGI permissions configuration file
CGICFG = "/etc/nagios/cgi.cfg"

The correct value is the path to your Nagios cgi.cfg, which usually sits in the same directory as nagios.cfg (/etc/nagios here).
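After fixing these settings, a quick check that every file vShell reads actually exists can save another round of error messages. This is a sketch assuming the `KEY = "path"` format shown above; `check_vshell_paths` is a hypothetical helper. It tests readability as the user running it, which may differ from the Apache user, so a file that passes here can still be blocked by permissions for Apache.

```shell
# Hypothetical helper: report whether each file path vShell reads from
# vshell.conf (OBJECTSFILE, STATUSFILE, CGICFG) exists and is readable.
check_vshell_paths() {
    local vshell_conf="${1:-/etc/vshell.conf}"
    local key path missing=0
    for key in OBJECTSFILE STATUSFILE CGICFG; do
        # Take the quoted value from a line like: KEY = "/some/path"
        path=$(sed -n -E "s/^[[:space:]]*$key[[:space:]]*=[[:space:]]*\"([^\"]*)\".*/\1/p" "$vshell_conf" | head -n 1)
        if [ -n "$path" ] && [ -r "$path" ]; then
            echo "ok: $key $path"
        else
            echo "missing: $key ${path:-<not set>}"
            missing=1
        fi
    done
    # Non-zero exit status if any path was missing or unreadable.
    return "$missing"
}
```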